Hard Margin Support Vector Machines

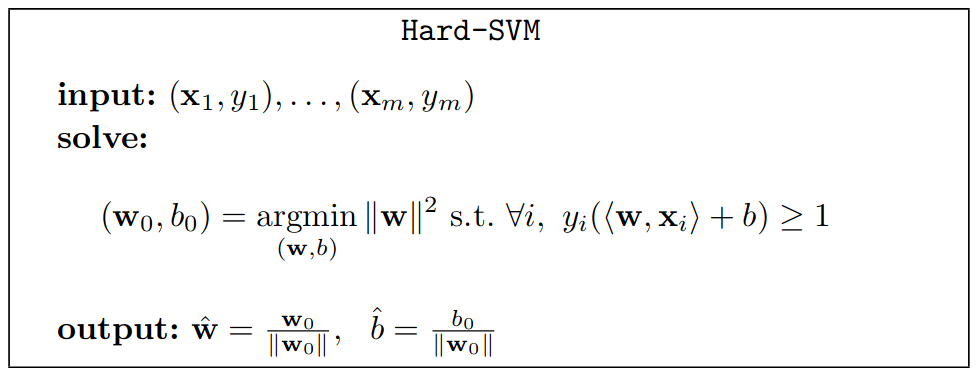

The most basic type of Support Vector Machine is known as a Hard Margin SVM.

A Hard Margin SVM tries to find a line that both separates the data, but is also as far away as possible to the points closest to it. The points closest to the line are known as Support Vectors and the lines parallel to the optimal line that pass through the support vectors form a margin.

On the plot below you can see the most optimal classifying line as well as the margin lines and the support vectors (the larger points). Try adding points in different locations to see how they affect the SVM.

Click to add an orange point.

Shift + Click to add an blue point.

What you should have seen is that if you add a point ouside the margin, the line that divides the different colours won't change. This makes sense since the points aren't support vectors. If you add points inside the margin, the SVM has to recalculate the classifying line so that is stays as far away from all the points.

If you added an orange point in the blue cluster then you will see that the the SVM fails to classify everything correctly since the orange ones you added are on the blue side of the line. The SVM makes its best attempt to come up with a valid classifier but it is skewed by the points that are not in the correct cluster. This is because, a Hard Margin SVM can only successfully classify linearly separable data. This is data where at least one line exists that can split the data into the two catagories.

What about data that's not completely Linearly Separable?

If we have data that looks like it could be split into two roughly correct catagories using a line despite the data not being linearly separable, does that mean we can't use an SVM? To get an accurate classifier for that data, we cannot use a Hard Margin SVM. Instead we have to use another type of Support vector machine that is more tolerant of errors known as a Soft Margin SVM.